Amazon Simple Storage Service (Amazon S3) is an object storage service that scales virtually without limit. Beyond scalability, its main strengths are high data availability, security, and performance. Part of what makes S3 distinctive is the range of use cases it serves, including backups, media hosting, data lakes, and static websites.

S3 offers a range of cost-effective storage classes, together with management features for optimizing costs, organizing data, and configuring access. This combination lets S3 cover as many use cases, and as many specific enterprise requirements, as possible.

Unlike file storage, object storage keeps data as objects: each object combines the data itself, its metadata, and a unique identifier. Objects live in a flat address space rather than a directory hierarchy, which lets the underlying system spread data across many storage nodes and grow simply by adding nodes; with S3, AWS manages this for you, so capacity is effectively unlimited. Because an object's data can be spread across many storage nodes, object storage can deliver much higher aggregate parallel throughput than file storage.

In a typical AWS account, S3 is the third-largest cost after EC2 and RDS. Identifying and acting on the following kinds of unused S3 data can help reduce unnecessary spend:

Incomplete Multipart Uploads

S3 lets you upload a single object as a set of parts. Each part is a contiguous portion of the object's data, and because parts can be uploaded independently and in parallel, multipart upload improves throughput, speeds recovery from failures, and increases resiliency. AWS recommends considering multipart upload for objects of 100 MB or larger.

Once all parts have been uploaded, S3 assembles them into the original object. If a transient network error interrupts the transfer, only the affected part needs to be uploaded again rather than the whole file. Multipart upload also lets you pause and resume uploads and begin uploading an object before you know its final size.
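To make the part mechanics concrete, here is a minimal Python sketch of how a client might plan the parts of a file. It assumes S3's documented limits of a 5 MiB minimum part size (except for the last part) and at most 10,000 parts per upload; the 8 MiB default and the function name `plan_parts` are illustrative choices, not part of any AWS API. High-level tools such as `aws s3 cp` and boto3's `upload_file` do this splitting for you.

```python
MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB  # S3 minimum size for every part except the last
MAX_PARTS = 10_000       # S3 maximum number of parts in one upload


def plan_parts(file_size: int, preferred_part_size: int = 8 * MIB) -> list[tuple[int, int, int]]:
    """Split file_size bytes into (part_number, offset, length) tuples.

    The part size starts at preferred_part_size (never below the 5 MiB
    minimum) and grows when needed so the plan stays within MAX_PARTS.
    """
    if file_size <= 0:
        return []
    # Integer ceiling division: smallest part size that fits in MAX_PARTS parts.
    min_size_for_limit = (file_size + MAX_PARTS - 1) // MAX_PARTS
    part_size = max(preferred_part_size, MIN_PART_SIZE, min_size_for_limit)
    parts = []
    offset = 0
    part_number = 1  # S3 part numbers start at 1
    while offset < file_size:
        length = min(part_size, file_size - offset)  # the last part may be shorter
        parts.append((part_number, offset, length))
        offset += length
        part_number += 1
    return parts
```

Each tuple would correspond to one `UploadPart` request; a final `CompleteMultipartUpload` request then asks S3 to assemble the parts into the object.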

Opportunity to Optimize

If a multipart upload is never completed, S3 does not assemble the parts and no object is created, but the parts that were already uploaded remain stored, and billed, until the upload is completed or aborted. Amazon S3 Storage Lens can show how much storage incomplete multipart uploads occupy, and S3 Lifecycle can clean them up: using the AWS Management Console or the AWS Command Line Interface (CLI), you can create a lifecycle rule, here called “delete-incomplete-mpu-7-days”, that removes the parts of uploads that were never assembled into a complete object.

DIY

Handling this problem takes two steps: first assess its scale, then clean it up.

Assessing the Problem with Amazon S3 Storage Lens

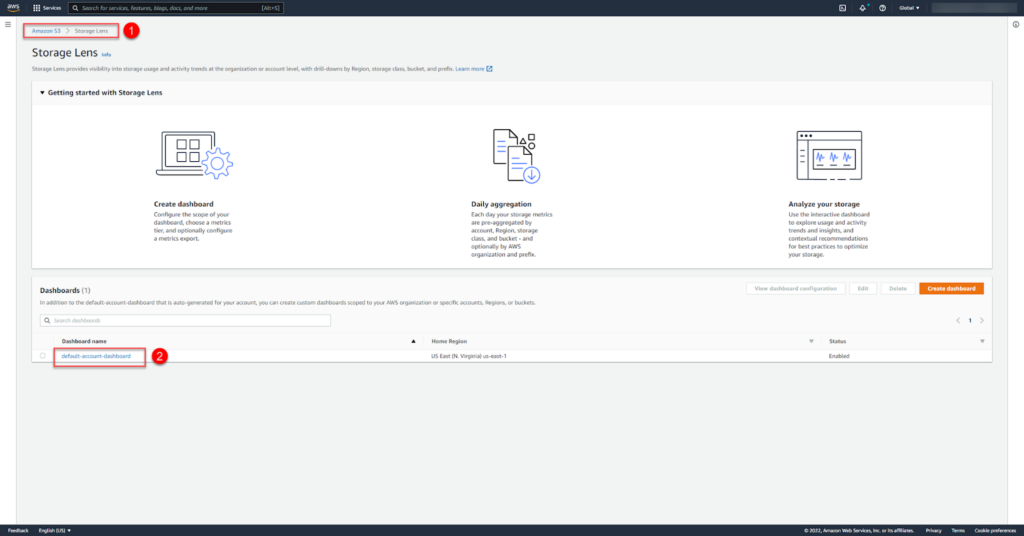

- Open the Amazon S3 Storage Lens dashboard

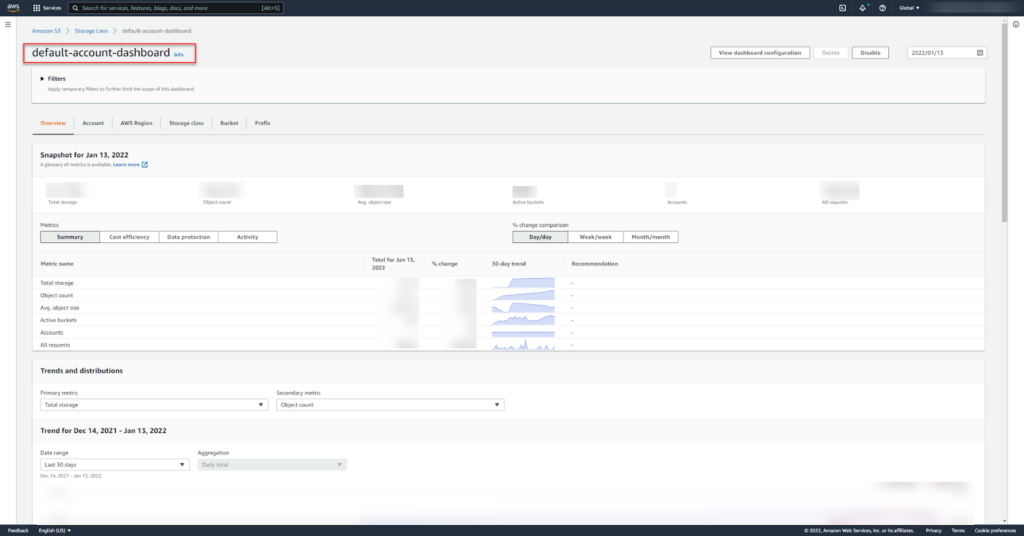

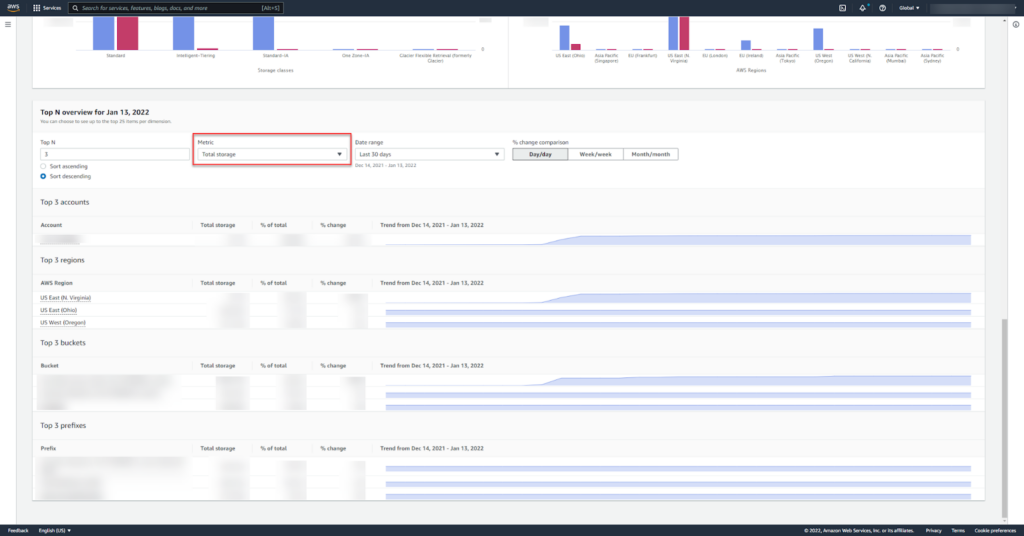

- Scroll down the dashboard to “Top N overview”

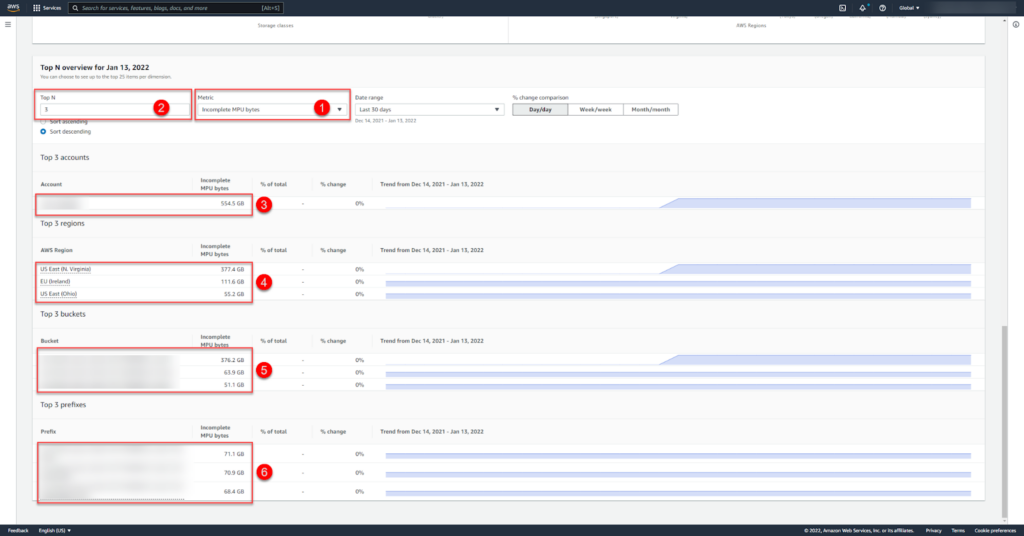

- Change the Metric to “Incomplete MPU Bytes” and set “Top N” to the number of results you want to examine

Box 3 shows the total size in bytes of incomplete multipart uploads for the account. Boxes 4, 5, and 6 show the same information per AWS Region, per S3 bucket, and per bucket prefix, respectively.
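Storage Lens reports totals. To see the individual incomplete uploads in one bucket, you could use the S3 `ListMultipartUploads` API. The Python sketch below only filters the `Uploads` entries of that API's response by age; the function name `stale_uploads` is hypothetical, and the 7-day default simply mirrors the rule name used in this section.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def stale_uploads(uploads: list[dict], now: Optional[datetime] = None, min_age_days: int = 7) -> list[dict]:
    """Return the entries of a ListMultipartUploads "Uploads" list that were
    initiated more than min_age_days ago.

    Each entry is expected to have "Key", "UploadId" and a timezone-aware
    "Initiated" datetime, which is how boto3 returns it.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - timedelta(days=min_age_days)
    # Anything initiated before the cutoff has been incomplete for too long.
    return [upload for upload in uploads if upload["Initiated"] < cutoff]
```

With boto3, you could call it on `page.get("Uploads", [])` for each page returned by `s3.get_paginator("list_multipart_uploads").paginate(Bucket="my-bucket")`, where `my-bucket` is a placeholder. Individual uploads can then be aborted with `abort_multipart_upload(Bucket=..., Key=..., UploadId=...)`, although a lifecycle rule is the more durable fix.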

Cleaning Up with an S3 Lifecycle Rule (AWS Management Console)

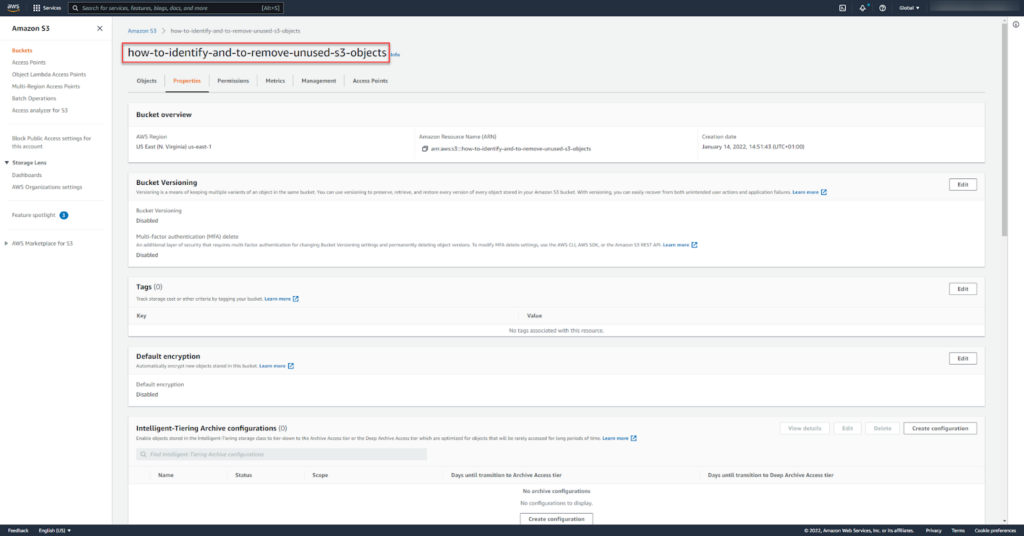

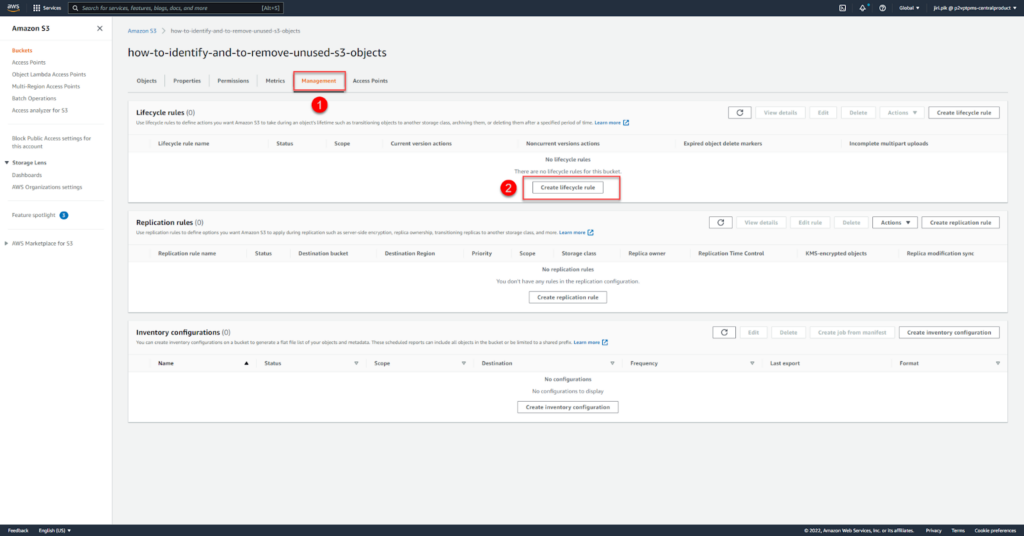

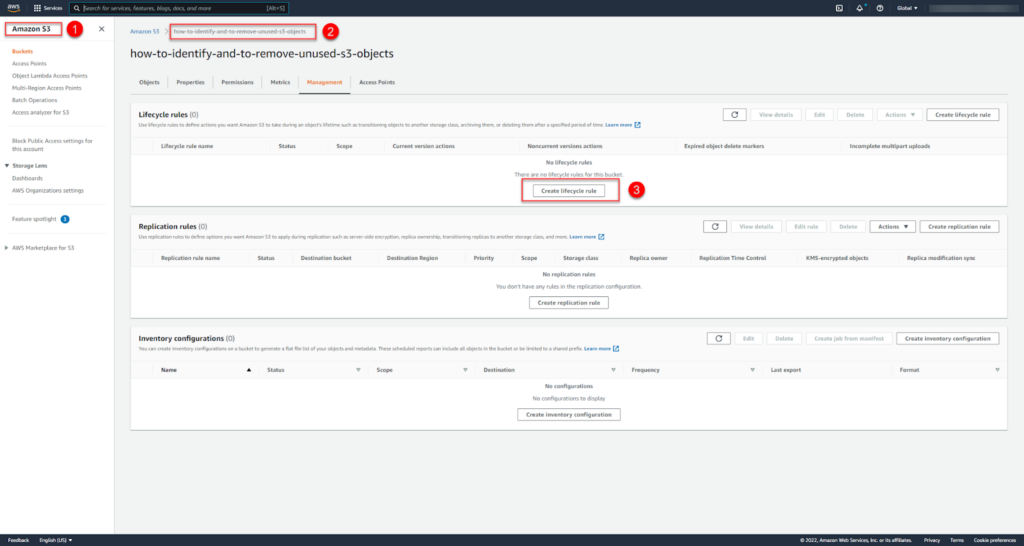

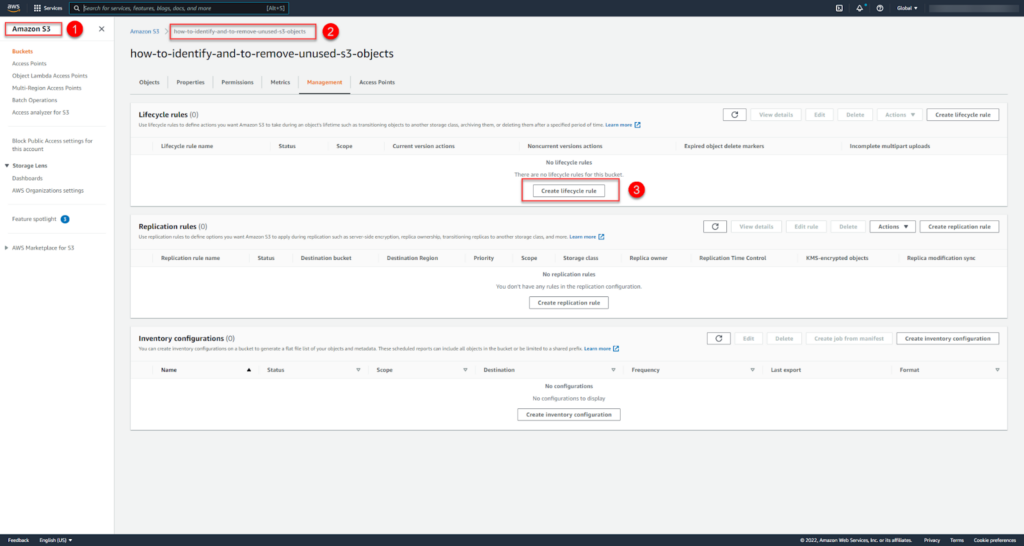

- Open the S3 bucket’s page in the AWS Management Console

- Choose the Management tab, then click “Create lifecycle rule”

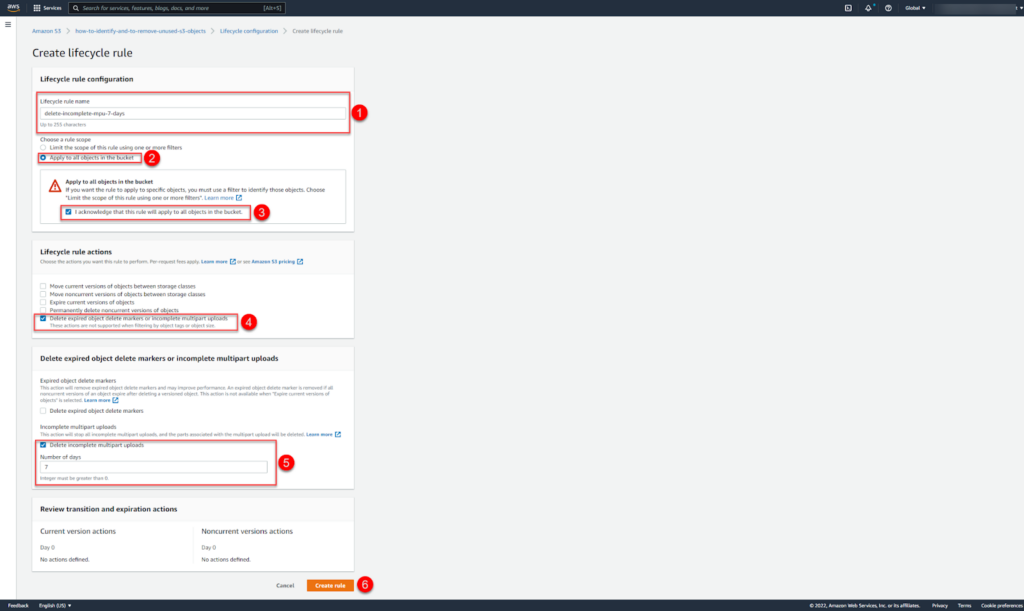

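- Create a lifecycle rule named “delete-incomplete-mpu-7-days”, configured as in the screenshot above to delete incomplete multipart uploads 7 days after they were initiated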
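As an alternative to the console, the same rule can be expressed as an S3 Lifecycle configuration document. This Python sketch builds it as a plain dictionary and prints it as JSON; the helper name and the empty-prefix filter (apply the rule to the whole bucket) are assumptions for illustration.

```python
import json


def abort_incomplete_mpu_rule(days: int = 7, prefix: str = "") -> dict:
    """One S3 Lifecycle rule that aborts multipart uploads still incomplete
    `days` days after they were initiated, which deletes their stored parts.
    An empty prefix applies the rule to every object in the bucket."""
    return {
        "ID": f"delete-incomplete-mpu-{days}-days",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
    }


lifecycle_configuration = {"Rules": [abort_incomplete_mpu_rule()]}
print(json.dumps(lifecycle_configuration, indent=2))
```

The printed JSON could be saved as `lifecycle.json` and applied with `aws s3api put-bucket-lifecycle-configuration --bucket my-bucket --lifecycle-configuration file://lifecycle.json`, or the dictionary passed to boto3's `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=...)`; `my-bucket` is a placeholder. This call replaces the bucket's entire lifecycle configuration, so any existing rules must be included in the same document.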

Old Versions of Versioned Objects and Expired Object Delete Markers

S3 buckets support versioning, which keeps multiple variants of the same object in one bucket. Versioning lets you preserve, retrieve, and restore every version of every object in the bucket, which helps you recover from unintended overwrites or deletions and from application failures.

Opportunity to Optimize

The opportunity for cost optimization here is significant, for two simple reasons:

- S3 has no separate pricing for versioning: each version is billed as a full object of its own size. Even when versions differ only slightly, each one adds its full size to the bill, so an object stored with one old version costs about twice as much as the object alone, and removing the unnecessary version cuts that cost in half.

- Keeping many versions of a single object is rarely needed. For most use cases, the noncurrent (older) versions of an object can be safely deleted after one year.
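A quick worked example of the halving claim, as a Python sketch. The price of $0.023 per GB-month is only a placeholder for illustration; actual S3 prices vary by storage class and Region.

```python
PRICE_PER_GB_MONTH = 0.023  # placeholder price; check current S3 pricing for your Region


def monthly_storage_cost(version_sizes_gb: list[float], price: float = PRICE_PER_GB_MONTH) -> float:
    """Each stored version is billed at its full size, so sum all versions."""
    return sum(version_sizes_gb) * price


# A 100 GB object whose previous version is also about 100 GB (only a few bytes changed).
with_old_version = monthly_storage_cost([100.0, 100.0])
current_only = monthly_storage_cost([100.0])
print(f"{with_old_version:.2f} vs {current_only:.2f} USD per month")
```

Because the billed size grows with every retained version, the savings from pruning versions scale with both object size and the number of versions kept.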

DIY

- Open the S3 Bucket in the AWS Management Console

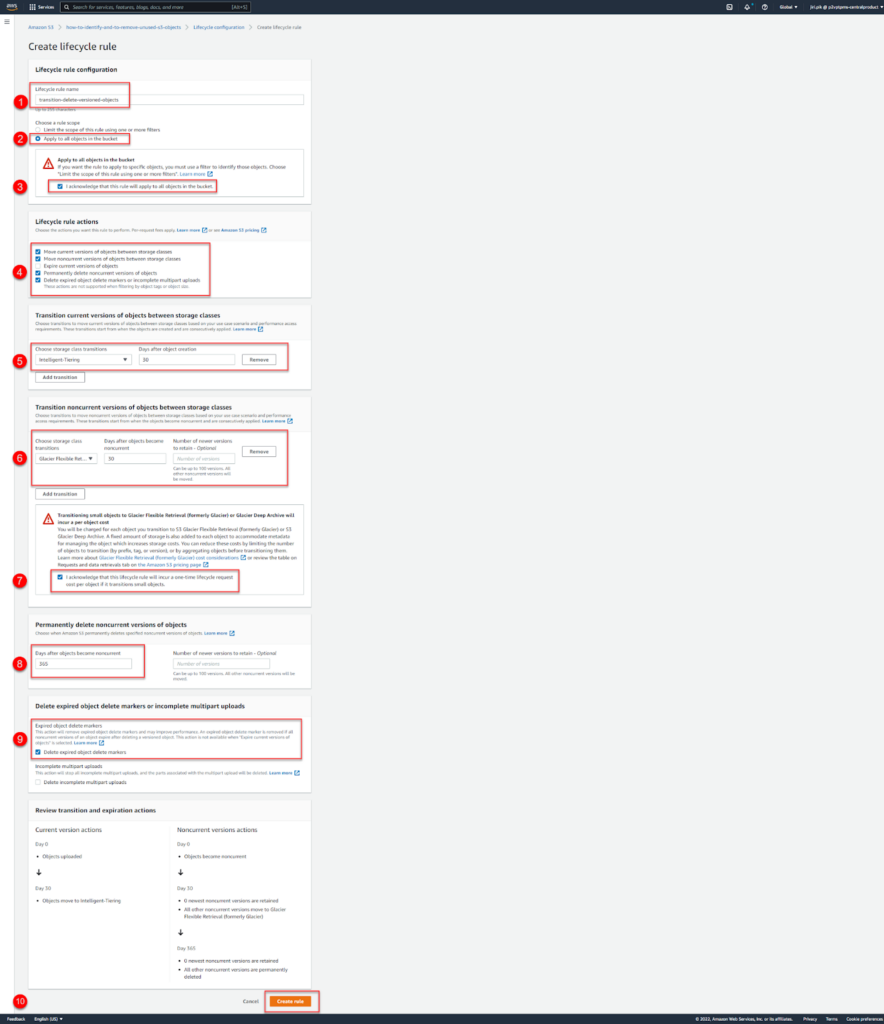

- Create a “transition-delete-versioned-objects” lifecycle rule in order to:

- Transition the current version of each object to the S3 Intelligent-Tiering storage class 30 days after creation

- Transition noncurrent versions to the S3 Glacier Flexible Retrieval storage class 30 days after they become noncurrent

- Permanently delete noncurrent versions 365 days after they become noncurrent

- Permanently delete expired object delete markers. When you delete an object in a versioning-enabled bucket without specifying a version ID, S3 does not remove the object; it only adds a delete marker, which becomes the current version. An expired object delete marker is one whose object versions have all been removed.

Note: The same rule can also be created with the AWS CLI.
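For reference, the rule described above might be written as the following S3 Lifecycle configuration, built here in Python. `GLACIER` is the API name for the S3 Glacier Flexible Retrieval storage class; the empty-prefix filter (whole bucket) and the helper name are assumptions for illustration.

```python
import json


def versioned_cleanup_rule() -> dict:
    """S3 Lifecycle rule covering the four actions listed above."""
    return {
        "ID": "transition-delete-versioned-objects",
        "Filter": {"Prefix": ""},  # empty prefix: every object in the bucket
        "Status": "Enabled",
        # Current versions: move to S3 Intelligent-Tiering 30 days after creation.
        "Transitions": [{"Days": 30, "StorageClass": "INTELLIGENT_TIERING"}],
        # Noncurrent versions: move to S3 Glacier Flexible Retrieval ("GLACIER")
        # 30 days after they become noncurrent...
        "NoncurrentVersionTransitions": [{"NoncurrentDays": 30, "StorageClass": "GLACIER"}],
        # ...and delete them permanently 365 days after they become noncurrent.
        "NoncurrentVersionExpiration": {"NoncurrentDays": 365},
        # Remove delete markers that no longer have any object versions behind them.
        "Expiration": {"ExpiredObjectDeleteMarker": True},
    }


print(json.dumps({"Rules": [versioned_cleanup_rule()]}, indent=2))
```

The resulting JSON can be applied with `aws s3api put-bucket-lifecycle-configuration` or boto3's `put_bucket_lifecycle_configuration`; since either call replaces the whole lifecycle configuration, merge this rule with any rules the bucket already has.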

Old Versions of Backups

When S3 holds backups of production systems, old backups, like old object versions, usually bring little value. Backups are billed by size like any other object; there is no special pricing for them. For more on the savings opportunity, see “Opportunity to Optimize” under “Old Versions of Versioned Objects and Expired Object Delete Markers”.

DIY

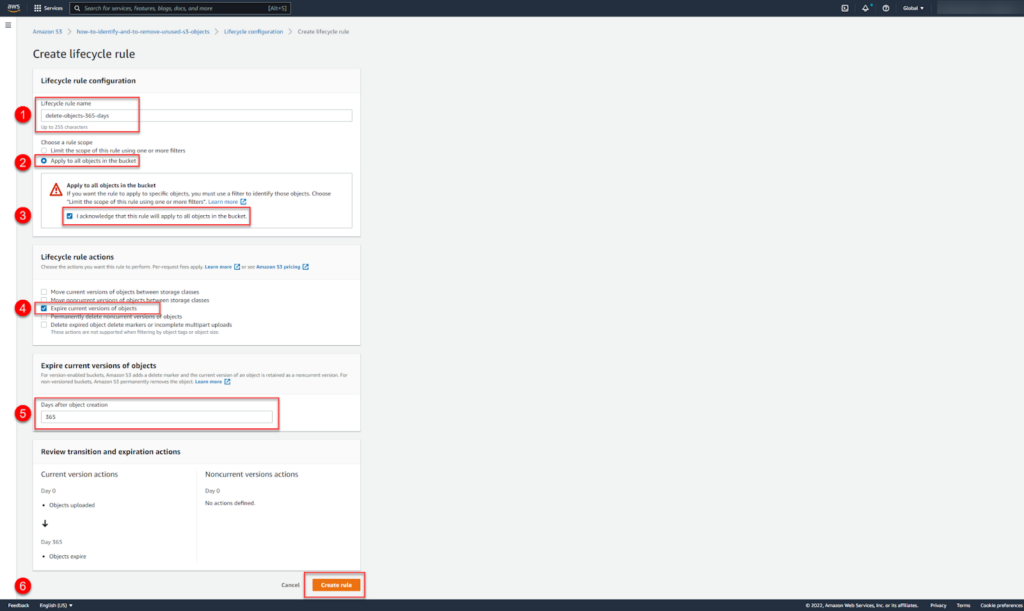

Set up an S3 Lifecycle rule that expires objects after one year:

- Open the S3 Bucket in the AWS Management Console

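- Set up the lifecycle rule “delete-objects-365-days” to expire current versions of objects 365 days after creation. In a versioned bucket, expiring a current version only adds a delete marker and keeps the old data as a noncurrent version, so pair this rule with a noncurrent-version expiration to actually free the storage.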

Note: The same rule can also be created with the AWS CLI.
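Before enabling an expiration rule on backups, it can help to preview its effect. This Python sketch defines the rule as a lifecycle dictionary and adds a hypothetical helper, `expiring_objects`, that approximates which objects the rule would expire, using the `Contents` entries of an S3 `ListObjectsV2` response. The whole-bucket filter is an assumption; a prefix such as `backups/` (also hypothetical) would narrow it.

```python
from datetime import datetime, timedelta

EXPIRE_AFTER_DAYS = 365

# S3 Lifecycle rule: expire current object versions 365 days after creation.
backup_rule = {
    "ID": "delete-objects-365-days",
    "Filter": {"Prefix": ""},  # whole bucket
    "Status": "Enabled",
    "Expiration": {"Days": EXPIRE_AFTER_DAYS},
}


def expiring_objects(contents: list[dict], now: datetime, days: int = EXPIRE_AFTER_DAYS) -> tuple[list[str], int]:
    """Approximate which objects the rule would expire and how many bytes they hold.

    `contents` is the "Contents" list of a ListObjectsV2 response (Key,
    LastModified, Size). S3 counts from creation time and rounds up to the
    next midnight UTC, so objects near the cutoff may differ by a day.
    """
    cutoff = now - timedelta(days=days)
    old = [obj for obj in contents if obj["LastModified"] < cutoff]
    return [obj["Key"] for obj in old], sum(obj["Size"] for obj in old)
```

With boto3, `contents` would come from `page.get("Contents", [])` for each page of `s3.get_paginator("list_objects_v2").paginate(Bucket=...)`, together with `now = datetime.now(timezone.utc)` so the comparison uses timezone-aware datetimes on both sides.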

Orphaned S3 Objects

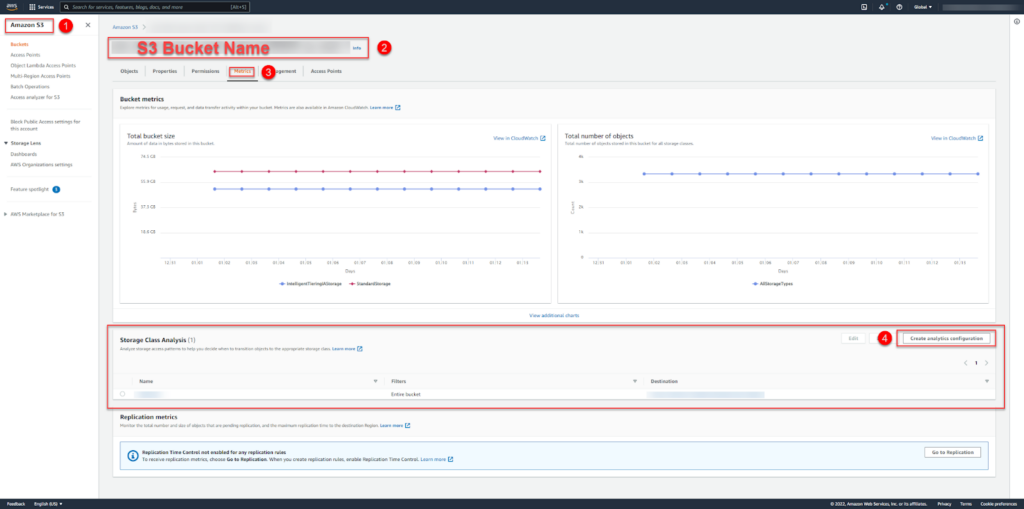

S3 Storage Class Analysis observes data access patterns across an entire bucket, or across objects filtered by prefix or tag, and helps identify data that has not been accessed for a long time.

Opportunity to Optimize

Although S3 Storage Class Analysis is a paid feature ($0.10 per million objects monitored per month), its benefits usually outweigh its cost.
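Using the price quoted above, the monitoring cost is simple to estimate. A minimal Python sketch; the function name is hypothetical, and the price should be checked against current pricing for your Region.

```python
PRICE_PER_MILLION_OBJECTS_USD = 0.10  # per million objects monitored per month, as quoted above


def storage_class_analysis_monthly_cost(objects_monitored: int) -> float:
    """Monthly Storage Class Analysis charge for the given number of objects."""
    return objects_monitored / 1_000_000 * PRICE_PER_MILLION_OBJECTS_USD
```

For example, monitoring 50 million objects would cost about $5 per month, which is small compared with the storage bill such a bucket typically carries.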

The goal is a balance: no object the business still needs should be deleted, while objects nobody uses should not keep adding to the bill. Storage Class Analysis helps strike this balance by observing data access patterns and identifying data that is no longer in use.

DIY

To set it up, proceed as follows:

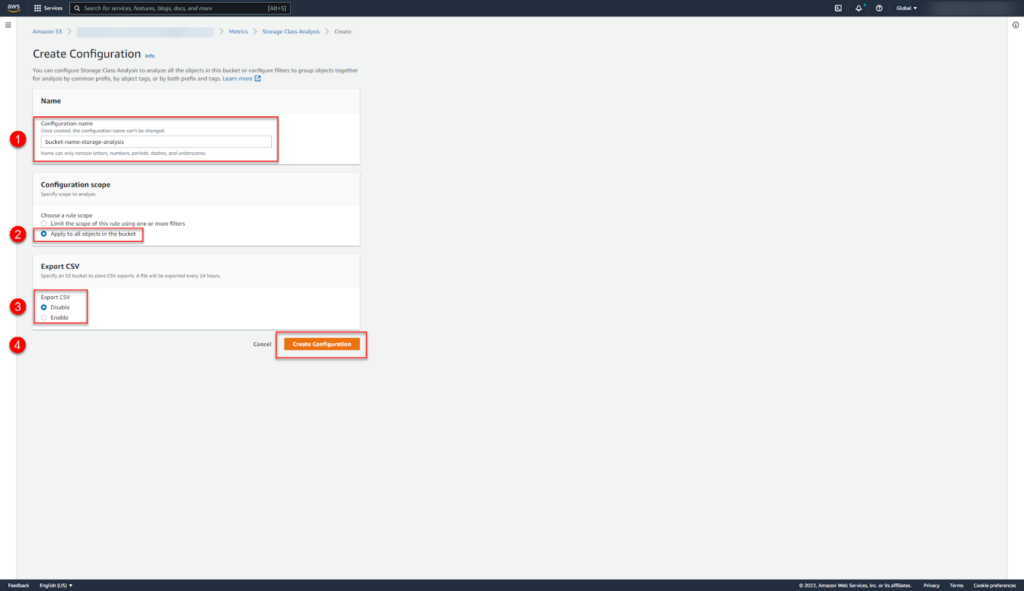

- Open an S3 Bucket page in the AWS Management Console

- On the Metrics tab, create an analytics configuration in the Storage Class Analysis section

Note: The same configuration can also be created with the AWS CLI.
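Programmatically, a Storage Class Analysis configuration is an `AnalyticsConfiguration` document passed to the `PutBucketAnalyticsConfiguration` API. The Python sketch below builds one; the helper name, the optional prefix filter, and the optional CSV export destination are illustrative assumptions, and the export bucket must be given as an ARN.

```python
def analytics_configuration(config_id: str, prefix: str = "", export_bucket_arn: str = "", export_prefix: str = "") -> dict:
    """Build an AnalyticsConfiguration for PutBucketAnalyticsConfiguration.

    With no prefix the analysis covers the whole bucket. If export_bucket_arn
    is given, analysis results are exported as CSV to that bucket.
    """
    config: dict = {"Id": config_id, "StorageClassAnalysis": {}}
    if prefix:
        # Restrict the analysis to objects under this prefix.
        config["Filter"] = {"Prefix": prefix}
    if export_bucket_arn:
        destination = {"Format": "CSV", "Bucket": export_bucket_arn}
        if export_prefix:
            destination["Prefix"] = export_prefix
        config["StorageClassAnalysis"]["DataExport"] = {
            "OutputSchemaVersion": "V_1",
            "Destination": {"S3BucketDestination": destination},
        }
    return config
```

With boto3, the result could be applied as `s3.put_bucket_analytics_configuration(Bucket="my-bucket", Id=cfg["Id"], AnalyticsConfiguration=cfg)`, where `my-bucket` is a placeholder; the CLI equivalent is `aws s3api put-bucket-analytics-configuration`.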